Key considerations for the ethical use of people data

Catherine Tansey – Oct 17th, 2023

The ethical use of workforce data is about more than compliance. It's an essential practice to build and support trust between employees and employers. Here's what HR and DEI leaders should consider.

People data has the potential to support more equitable, high-performing workplaces that benefit workers and organizations—when used fairly and ethically.

Yet while most companies are eager to leverage their people data to support performance gains by investing in data scientists, building data lakes, and more, fewer emphasize the need, or a commitment, to do so ethically.

Recent research published in the journal Personnel Review analyzed the existing literature on people analytics and found the “...near absence of ethical considerations in the corpus of academic, grey and online literature, despite the significant risks to privacy and autonomy these innovations present for employees.”

For organizations, ethical use and treatment of workforce data shouldn’t just be about remaining compliant—it’s also an essential practice to build and support trust between employees and the company.

As HR and DEI leaders, it's your job to advocate for ethical employee data use by designing and enforcing principles that govern it across the org. Here’s what to consider.

Ethical data collection

Today’s data sources stretch far and wide. Employers can collect data from the obvious sources, like employee engagement surveys or pulse surveys; gather information from covert sources, like keystroke actions; or mine publicly available professional data, like that found on LinkedIn or personal websites.

Companies collect data for myriad purposes, and while people data has the potential to transform workplaces, positive intentions are not sufficient to ensure companies ethically handle data.

Consider the retailer Sports Direct, which asked employees to rate their satisfaction with the company upon arrival for a working shift by pressing either a happy- or sad-face emoji button. The Guardian’s reporting on the company revealed that workers who opted for the sad-face emoji were then forced to meet with managers to explain their dissatisfaction.

Missteps like this by companies annihilate trust and credibility with their workforce—whose cooperation is needed to make meaningful strides in creating a diverse, equitable, and people-centric place of employment.

Gartner research shows that 82% of workers surveyed say it’s important that employers view them as individuals, and not just employees, yet only 45% agree that their company actually does.

Companies can do a lot to avoid the perception that employee data simply serves to enable the machinations of the corporation. Ethical data treatment is a must, but so is asking for the right data. Take voluntary self-identification campaigns (self-ID), for example. These are considered essential to the ability of a company to do meaningful work with DEI analytics, and yet some companies fall flat in their efforts.

To boost participation, HR and diversity leaders can do a few things. One, involve employee resource group (ERG) leaders in building the self-ID campaign. They can help HR leaders ensure the right questions are asked, and in the right language.

Two, emphasize the reasons you’re requesting this information, and tie it back to your company values. You could write, “Employee data helps us see the unique challenges that various identity groups face within our organization. We are committed to using this highly personal data to foster a fairer, more inclusive workplace for everyone, particularly individuals who have been historically marginalized and overlooked.”

Read more about voluntary self-identification campaigns in our part I and part II guides.

Security comes first

While companies may be tempted to rely on regulations like GDPR and the California Consumer Privacy Act (CCPA) to protect employee data, these don’t reach deep enough to ensure ethical data use. What’s more, “Employee data that informs and improves a company’s core operations can be exempt from regulatory constraints,” write Dorothy Leidner, Olgerta Tona, Barbara H. Wixom, and Ida A. Someh in their MIT Sloan article Putting Dignity at the Core of Employee Data Use.

It can be easiest to comprehend the need for data security when we consider an individual’s data; this is highly personal information for which individuals, from an ethical (and often legal) standpoint, deserve the right to privacy and security. Yet the need for ethically secured systems takes on new weight when we consider merged people datasets.

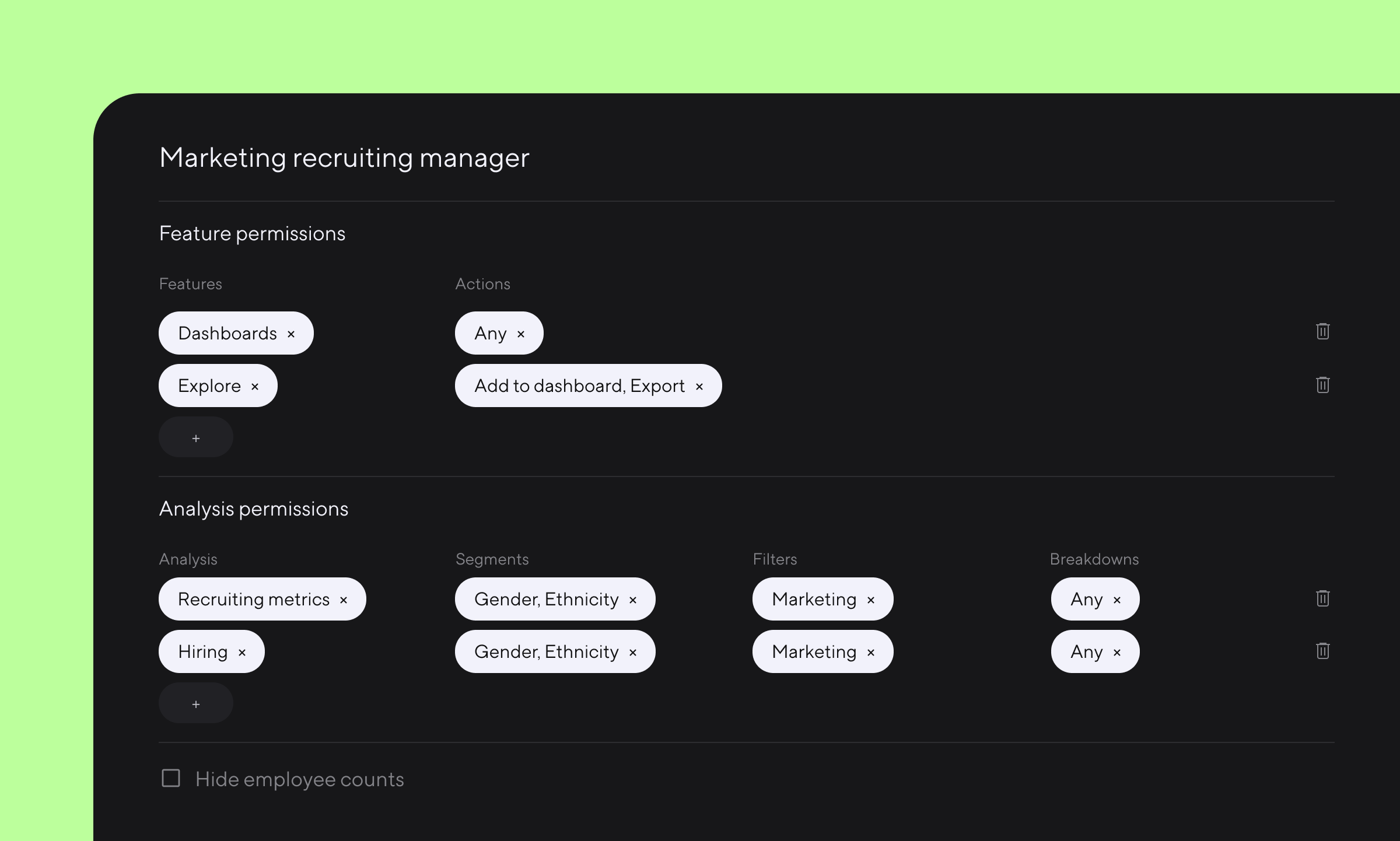

“What happens when [one] individual’s data is put in relation with another employee’s data to tell a whole new story? This combining of data eventually leads to ‘things being inferred about us’ which ‘can have a range of effects on our personal agency and more broadly on society as a whole,’” write Waliyah Sahqani and Luca Turchet in Co-designing Employees' Data Privacy: a Technology Consultancy.

Despite the obvious need for data security, at many companies, people data is scattered between spreadsheets or people analytics platforms, or left vulnerable in another form. These options don’t offer sufficient security or granular-level user permissions, so access tends to be limited to very few people.

But for people data to be impactful, it needs to be shared in a responsible and ethical manner. Companies need systems that allow for nuanced differentiation of access based on user roles so they can strike the right balance between visibility and privacy.
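To make the idea of role-based access a little more concrete, here is a minimal sketch in Python. It is an illustration only, not a description of any particular product: the role names, the field names, and the `visible_record` helper are all assumptions made up for this example, and a real policy would come from your own governance rules.

```python
# Hypothetical roles and the fields each one may see (assumed for illustration).
ROLE_FIELDS = {
    "hr_admin": {"employee_id", "department", "salary", "gender"},
    "manager": {"employee_id", "department"},
    "analyst": {"department", "salary", "gender"},  # no direct identifiers
}


def visible_record(record: dict, role: str) -> dict:
    """Return only the fields the given role is allowed to see."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see nothing
    return {key: value for key, value in record.items() if key in allowed}


# A made-up employee record, used only to show the filtering.
record = {"employee_id": 101, "department": "Sales", "salary": 85000, "gender": "F"}

print(visible_record(record, "manager"))
```

In this sketch a manager sees who is on the team but not pay or demographic details, an analyst sees those details without the identifier, and a role that isn't listed sees nothing at all. Defaulting to no access is the design choice worth noting.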

Inaccurate data is unethical

Clean, accurate data is essential to ethical data use. Since companies rely on people data to make hugely consequential decisions around hiring, promotions, compensation, and more, it’s imperative for companies to be confident about the accuracy of their data, not just from an ethical standpoint but a legal one, too.

Alongside the arrival of generative AI’s everyday use in 2023, fears of these systems’ potential to perpetuate bias and inequity have ushered the conversation on data accuracy to center stage. While companies far and wide are already using generative AI algorithms across their orgs with seemingly little oversight, the EEOC hasn’t minced words in reinforcing organizational responsibility for any discrimination that ensues.

At companies where biased data feeds algorithms, or where data is sold without consent or otherwise mishandled, board members could potentially be held accountable under the Caremark doctrine, too.

The best bet for companies when it comes to ensuring data accuracy is to implement systems to collect accurate data from the start, rather than trying to correct errors later on. HR and diversity leaders should work cross-functionally to vet technology solutions that enable flexible data collection, store data securely, and protect it with role-based system access.

Transparency builds trust

When it comes to employee data, companies must consider how much to share, with whom, and when. Between sharing nothing (near-universally condemned as unacceptable by employees) and sharing everything, which poses legal risks and ethical concerns, companies must find middle ground.

Generally speaking, employees want (and deserve) access to more of the information companies are storing on them. What’s more, being transparent about data collection and use tends to benefit companies in the long run.

Gartner’s 2021 Q3 survey showed that a company’s transparency policies would influence job choice: 70% of employees said they would take one job offer over another based on the org’s “transparency practices,” yet only one-third of companies “practice true information transparency.”

Employees have the right to understand what data is being collected about them, how it’s being used or will be in the future, and how to access that data. As advocates for employees, it’s up to HR and diversity leaders to underscore this point in the C-suite and boardroom.

The pressure for companies to treat data ethically will only build. Consumers, employees, and, increasingly, governments are demanding more responsible data collection, use, and storage, and rightfully so.

Each organization will have to consider what ethical data use means for them, but along with other stakeholders like legal and IT, HR and DEI leaders should be part of shaping these policies for the company.

More from the blog

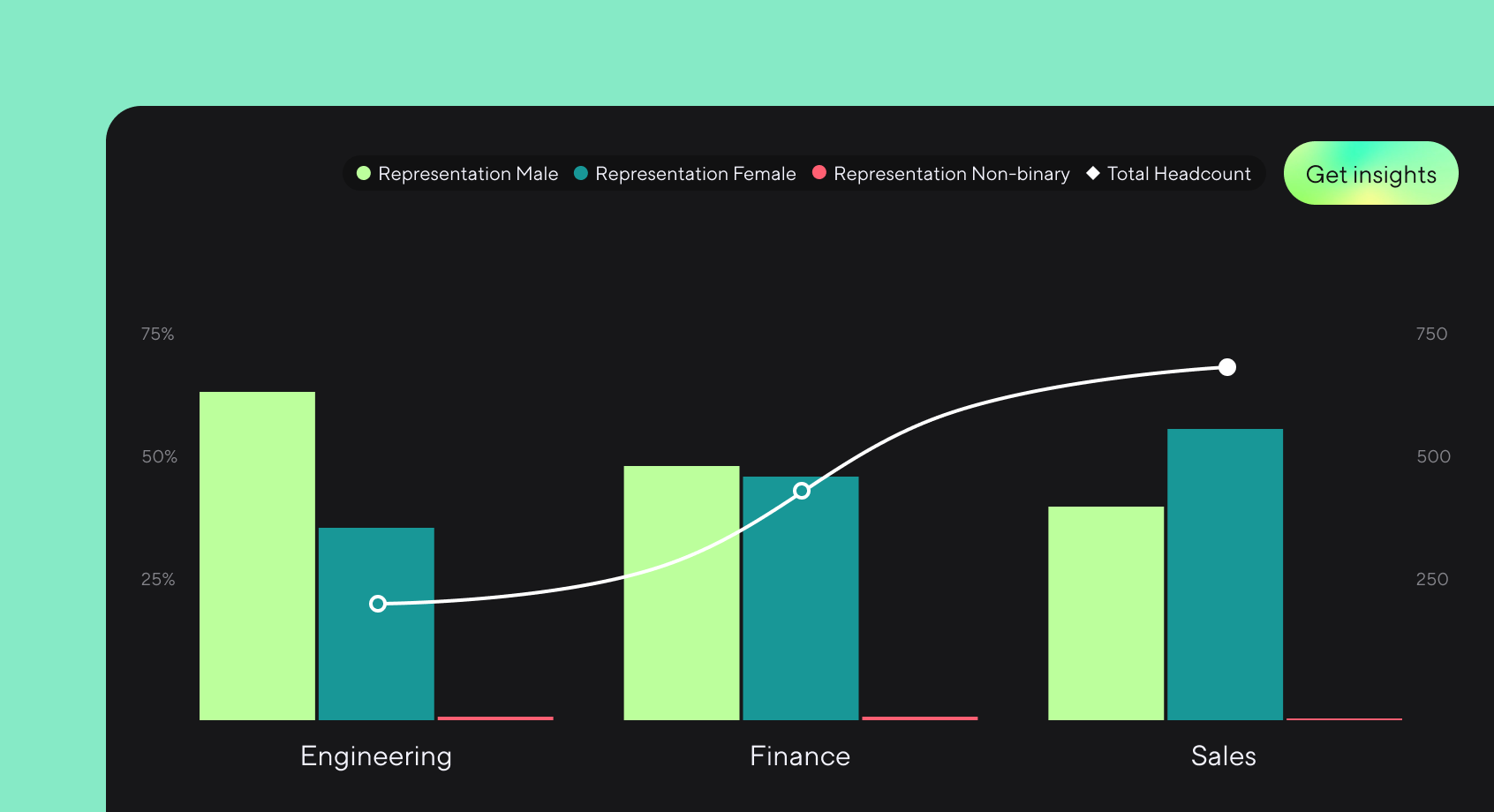

Announcing more powerful Dandi data visualizations

Team Dandi - Oct 23rd, 2024

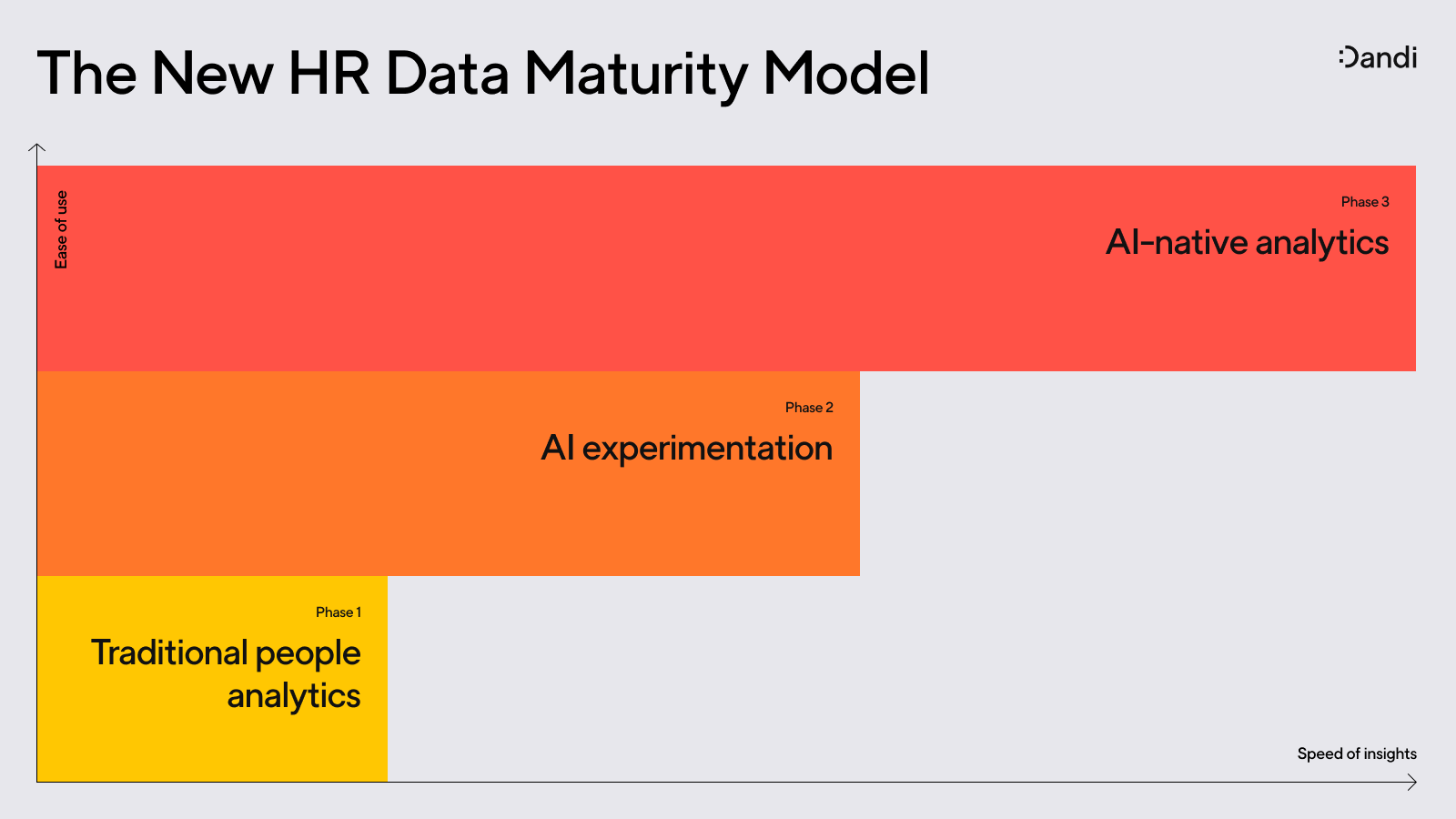

The New Maturity Model for HR Data

Catherine Tansey - Sep 5th, 2024

Buyer’s Guide: AI for HR Data

Catherine Tansey - Jul 24th, 2024

Powerful people insights, 3X faster

Team Dandi - Jun 18th, 2024

Dandi Insights: In-Person vs. Remote

Catherine Tansey - Jun 10th, 2024

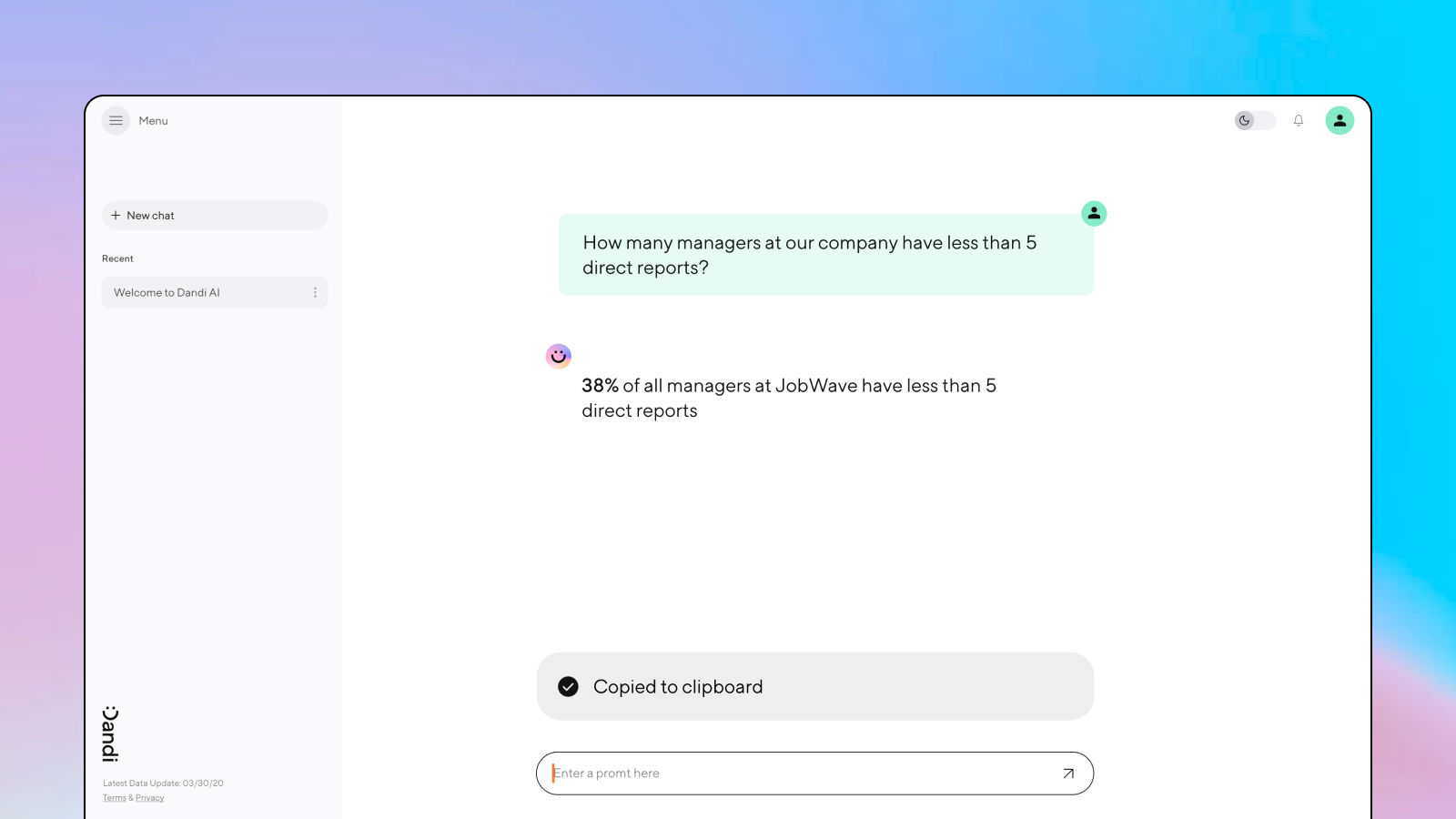

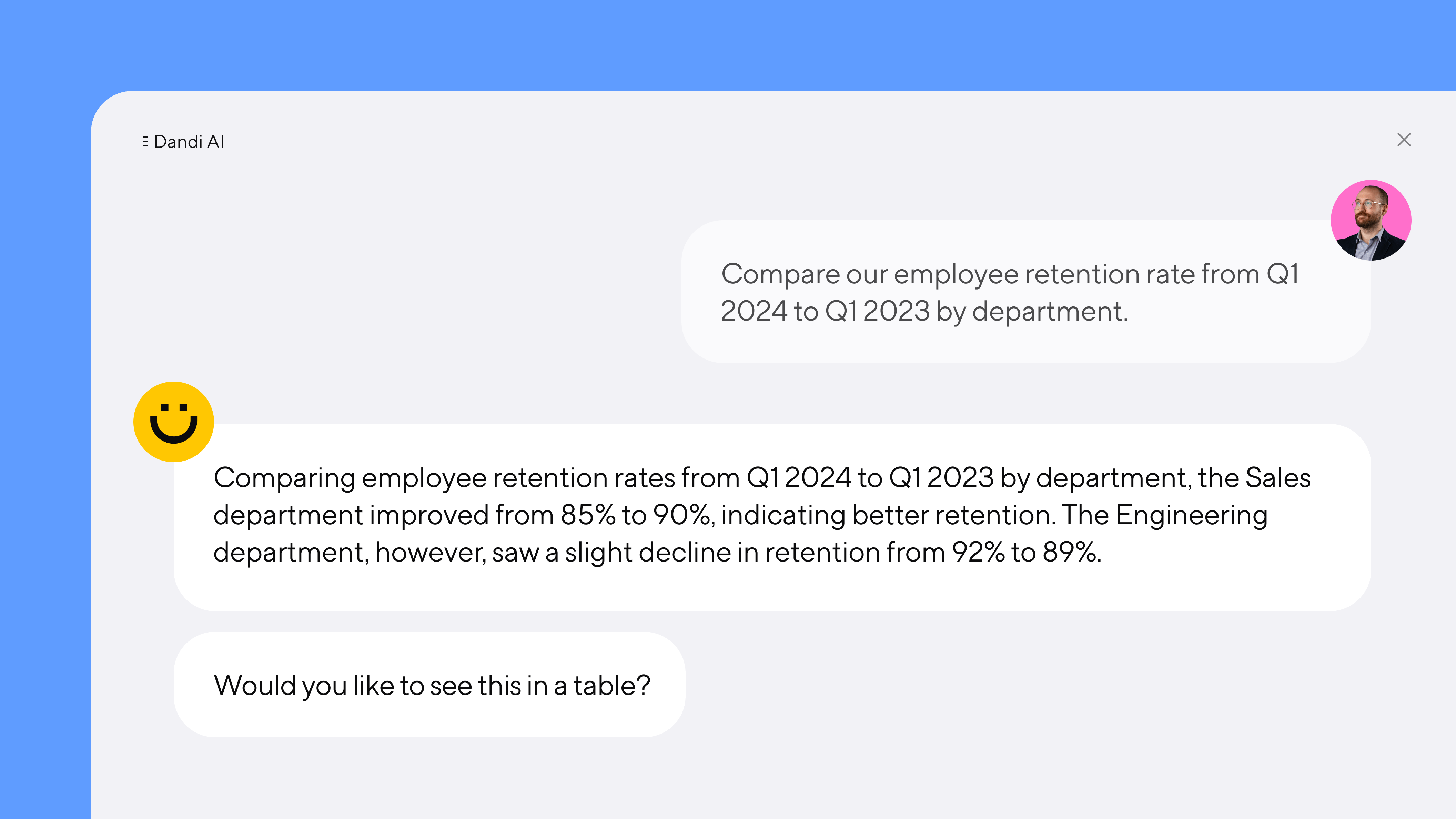

Introducing Dandi AI for HR Data

Team Dandi - May 22nd, 2024

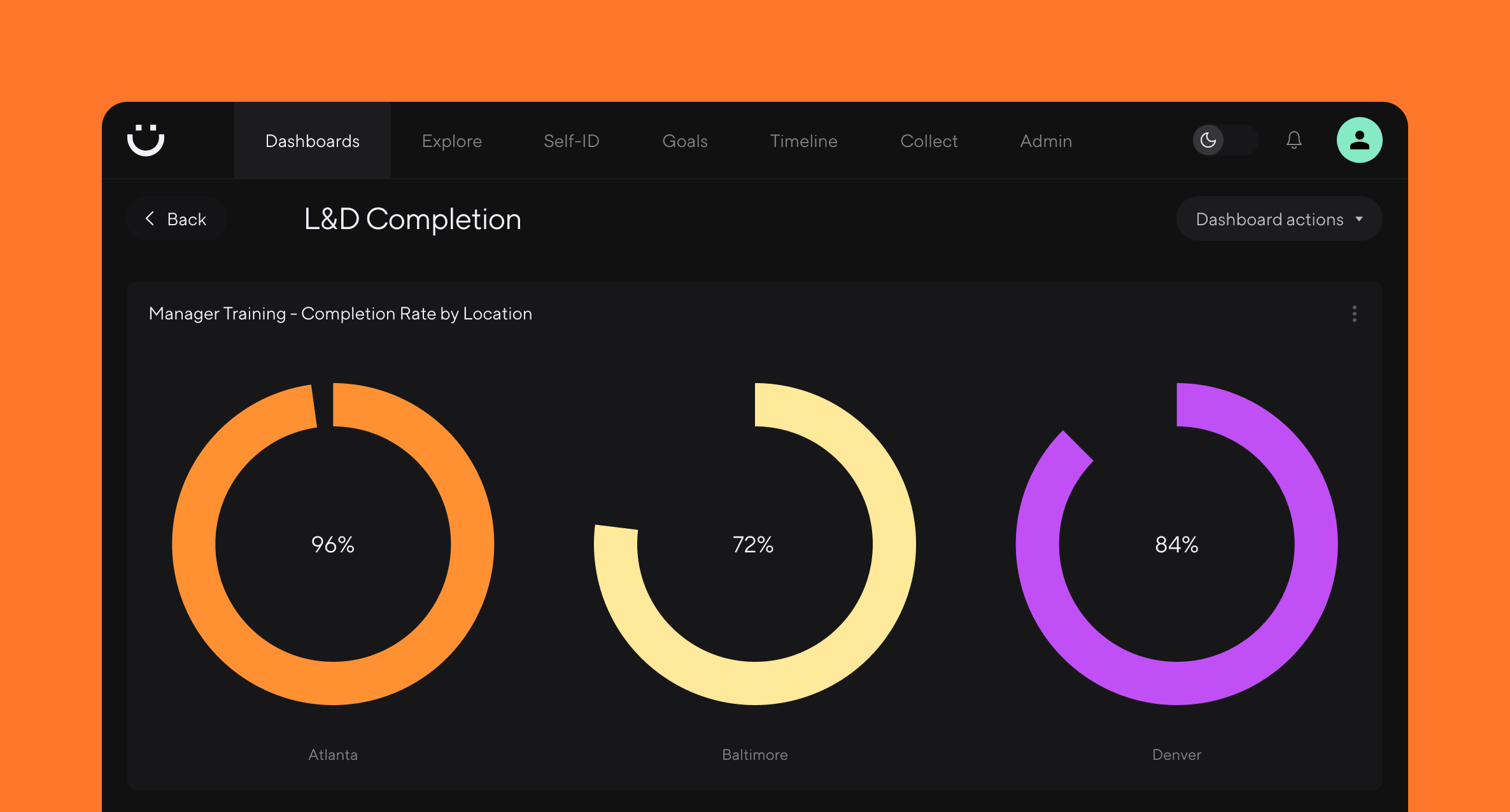

5 essential talent and development dashboards

Catherine Tansey - May 1st, 2024

The people data compliance checklist

Catherine Tansey - Apr 17th, 2024

5 essential EX dashboards

Catherine Tansey - Apr 10th, 2024

Proven strategies for boosting engagement in self-ID campaigns

Catherine Tansey - Mar 27th, 2024